Challenges to securing URLs in SMS – Flubot and Smishing Solutions Part 5/7

What do FluBot and SMS phishing have in common? Shocking daily headlines, the Wild West of URLs, and one easy solution to stop both in their tracks.

If you have heard anything about the newest Flubot attack, you have probably also warned your parents and others not to trust or click any link from an SMS message. This is bad news for MNOs. Fraud has been on a steep incline since the COVID-19 pandemic began, and consumers, while under attack from the virus, are also under attack electronically and financially.

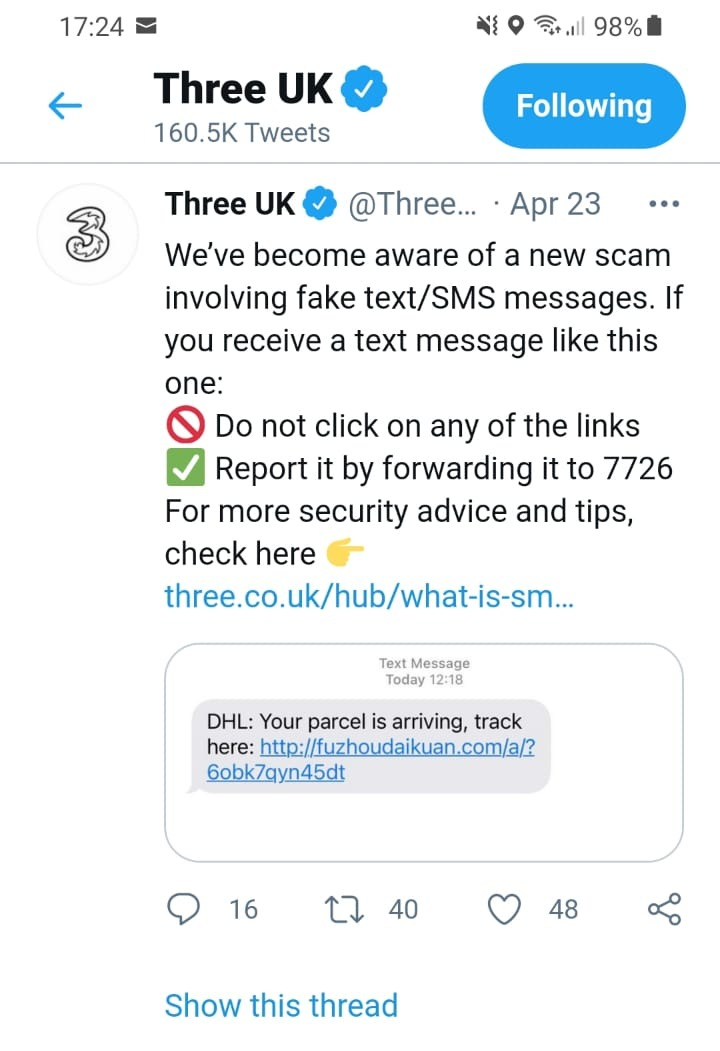

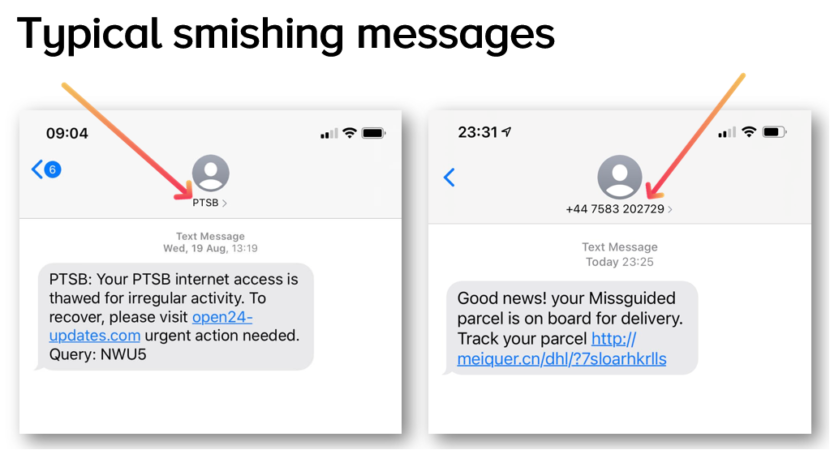

Smishing, malware, and the Flubot are dominating headlines. These attacks may seem like separate threats, but they have a lot in common: they all begin with an SMS message and end in devastating financial losses to subscribers. Mobile operators have responded by issuing warnings to consumers, yet they are missing an enormous opportunity to save the day and savour the glory (and press coverage) that any hero deserves.

Challenges to securing URLs in SMS

Many solutions have been proposed to this problem, but frankly, none of them is working well enough. Malicious URLs are still responsible for over 90% of all cyberattacks. Each solution addresses part of the problem, and none is ineffective at what it aims to do. But like hand-washing, mask-wearing, and social distancing, these measures slow the problem and are far from a real cure.

Spam filters and SMS firewalls

An SMS firewall is an excellent tool for combating all of these attacks. Spam filters and SMS firewalls are built to identify and block various types of SMS fraud, such as spam and number spoofing, which contribute to the volume and efficacy of an attack. However, an SMS firewall alone cannot verify the security of a website URL contained in the message without an external query.

Even the best SMS firewalls will not catch all malicious SMS messages, especially those sent at low volumes or with fresh tricks to get around current content filtering policies. A few will always sneak through, and the real threat is not in the message but in the URL itself. A firewall is only as good as its ruleset, so firewalls that are not continually updated and effectively maintained in the face of emerging threats are no solution at all.

Blocklists

Blocking access to known fraudulent websites seems like a common-sense way to protect subscribers, and of course it should be done. However, when we look at what it takes for a malicious URL to reach that blocklist, we see that this is far from a solution. The first thing it takes is time.

According to Google, criminals need their deceptive URL to be up and running for only 7 minutes to achieve their goal in a targeted attack on a person or company, and for 13 hours in a bulk campaign. In practice, security vendors take an average of 2-3 days to investigate and block new dangerous domains.

It is estimated that a new phishing site is set up every 20 seconds, and attacks happen quickly. Most smishing attacks use cheap website domains that were registered the same day or within days of the attack itself. This does not allow enough time to verify if a site is safe or dangerous, because nearly a million new domains are registered every single day.
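Because so many smishing domains are registered within days of the attack, domain age is one signal a screening step could use. The following is a minimal sketch only: the 7-day threshold is an assumption chosen for illustration, and the registration date is assumed to come from a WHOIS or RDAP lookup that is not shown here.

```python
from datetime import datetime, timedelta, timezone

# Assumed threshold for illustration: domains younger than this are suspicious.
MIN_DOMAIN_AGE = timedelta(days=7)


def is_newly_registered(registered_at: datetime, now: datetime,
                        min_age: timedelta = MIN_DOMAIN_AGE) -> bool:
    """Return True when the domain is younger than min_age."""
    return now - registered_at < min_age


# Example: a domain registered four hours before the SMS was sent.
now = datetime(2021, 6, 10, 12, 0, tzinfo=timezone.utc)
same_day_domain = datetime(2021, 6, 10, 8, 0, tzinfo=timezone.utc)
established_domain = datetime(2015, 1, 1, tzinfo=timezone.utc)

print(is_newly_registered(same_day_domain, now))
print(is_newly_registered(established_domain, now))
```

A young domain is not proof of fraud, since legitimate sites are registered every day too, so a check like this can only raise suspicion rather than deliver a verdict.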

Secondly, these blocklists rely largely on reports of fraud by consumers rather than a proactive screening. That is to say, someone must discover how fraudulent the site is the hard way before it can be flagged as such. Even after reporting a suspicious domain, it may be many more days before action is taken. In this span of time, much damage can be done and blocking the website will be far too late for many.
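To put the figures quoted above side by side, here is a small back-of-the-envelope calculation. It uses only the numbers already cited (7 minutes, 13 hours, 2-3 days, one new site every 20 seconds); the variable and function names are ours.

```python
SECONDS_PER_DAY = 24 * 60 * 60

NEW_SITE_INTERVAL_SECONDS = 20   # one new phishing site every 20 seconds
TARGETED_ATTACK_MINUTES = 7      # uptime needed for a targeted attack
BULK_CAMPAIGN_HOURS = 13         # uptime needed for a bulk campaign
BLOCKLIST_DELAY_DAYS = (2, 3)    # average vendor delay before blocking

sites_per_day = SECONDS_PER_DAY // NEW_SITE_INTERVAL_SECONDS


def new_sites_during(days: int) -> int:
    """Number of new phishing sites created while a blocklist catches up."""
    return days * sites_per_day


def windows_in_delay(days: int, window_minutes: float) -> float:
    """How many attack windows fit inside the blocklist delay."""
    return days * 24 * 60 / window_minutes


for days in BLOCKLIST_DELAY_DAYS:
    print(
        f"{days} days: {new_sites_during(days)} new sites, "
        f"{windows_in_delay(days, TARGETED_ATTACK_MINUTES):.0f} targeted-attack windows, "
        f"{windows_in_delay(days, BULK_CAMPAIGN_HOURS * 60):.1f} bulk-campaign windows"
    )
```

At one new site every 20 seconds, 4,320 sites appear per day, so a two-day blocklist delay spans 8,640 new phishing sites and a three-day delay spans 12,960.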

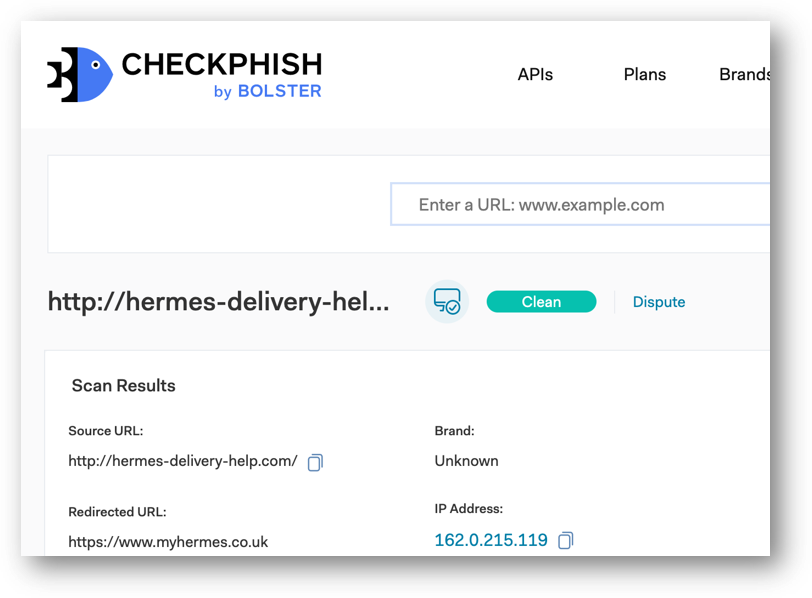

Web domains are not always what they seem. In the Flubot attack, we are seeing attackers use well-known and trusted domains such as play.google.com or GitHub, which would not be found on a blocklist. However, the malware is actually hosted and served from the user-content section of these websites. This user content is never vetted by the domain owner or anyone else, so an individual URL may be malicious even if the domain is credible and resistant to being blocklisted.
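The difference between blocking a domain and blocking a URL can be sketched in a few lines. Everything here is hypothetical: `code-hosting.example` stands in for a trusted platform that hosts user content, and both blocklists are made-up sets rather than any real feed.

```python
from urllib.parse import urlsplit

# Hypothetical blocklists for illustration only.
BLOCKED_DOMAINS = {"evil-parcel-tracking.example"}
BLOCKED_URLS = {"https://code-hosting.example/user123/files/tracker.apk"}


def normalize(url: str) -> str:
    """Lower-case scheme and host; drop port, query and fragment (a simplification)."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    return f"{parts.scheme.lower()}://{host}{parts.path}"


def blocked_by_domain(url: str) -> bool:
    """Domain-level check: only the host name is compared."""
    host = (urlsplit(url).hostname or "").lower()
    return host in BLOCKED_DOMAINS


def blocked_by_url(url: str) -> bool:
    """URL-level check: the full normalized URL is compared."""
    return normalize(url) in BLOCKED_URLS


malware_link = "https://Code-Hosting.example/user123/files/tracker.apk"
benign_link = "https://code-hosting.example/another-user/readme"

print(blocked_by_domain(malware_link), blocked_by_url(malware_link))
print(blocked_by_domain(benign_link), blocked_by_url(benign_link))
```

The domain-level check lets the malware link through because the host is trusted, while the URL-level check catches it without blocking the rest of the platform.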

SSL or TLS

SSL (also known as TLS) certificates are a joke. Some SSL certificates can be self-certified in less than a day. The mere presence of “an SSL certificate” is inconsequential and is, in fact, as good as not having one at all. The existence of this certificate does nothing to protect the consumer in this circumstance. Certificates may be more effective on a desktop than on a mobile device, but in no case can they be considered an effective form of either protection or information.

A consumer will not receive a warning as long as a website has any kind of SSL certificate, and will therefore believe they are protected. The problem is that there are different levels of scrutiny for different kinds of SSL certificates. An e-commerce site (which asks for shipping and payment details) and a home decoration blog should not use the same kind of SSL certificate, and yet if they do, the subscriber is none the wiser. Fraudsters can easily get a domain-validated (DV) SSL certificate for their smishing website. “The process only requires website owners to prove domain ownership by responding to an email or phone call.”
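Although the subscriber cannot see the difference, software can at least make a rough guess at how much scrutiny a certificate received. The sketch below is a heuristic, not a definitive test: it assumes a certificate dictionary in the shape returned by Python's `ssl.SSLSocket.getpeercert()` and relies on the observation that domain-validated certificates normally carry no `organizationName` in their subject. The sample certificates are invented.

```python
def subject_fields(cert: dict) -> dict:
    """Flatten the nested 'subject' tuples of a getpeercert()-style dict."""
    fields = {}
    for rdn in cert.get("subject", ()):
        for name, value in rdn:
            fields[name] = value
    return fields


def looks_domain_validated_only(cert: dict) -> bool:
    """Heuristic: no organization in the subject suggests a DV certificate."""
    return "organizationName" not in subject_fields(cert)


# Invented examples in the getpeercert() shape.
dv_cert = {"subject": ((("commonName", "parcel-tracking.example"),),)}
ov_cert = {
    "subject": (
        (("countryName", "IE"),),
        (("organizationName", "Example Bank Ltd"),),
        (("commonName", "bank.example"),),
    )
}

print(looks_domain_validated_only(dv_cert))
print(looks_domain_validated_only(ov_cert))
```

A check like this only shows that the owner of the site controlled the domain. It says nothing about whether the site is honest, which is the point made above.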

Education and publicity

Public outreach relies on mobile phone users casually hearing about new threats through news, social media, and other outlets. Operators might warn subscribers more aggressively through email or even SMS campaigns. These approaches are scattergun and will not ultimately reach everyone they need to. Proofpoint found that 72% of adults are not familiar with smishing.

Although operators must take action to warn their subscribers of impending threats, they may be hesitant to draw attention to fraud and security concerns of this sort. After all, when the public is taught not to trust links in SMS, they will do precisely that. In fact, they begin to distrust SMS overall.

Verified Senders

Google, the Mobile Ecosystem Forum (MEF), and others have organised verified sender schemes in which only the brand registered to a sender ID is able to use that sender ID, and all other attempts are blocked by the operator. This is a great solution for likely targets such as banks, in that someone cannot spoof and impersonate your bank and land messages in the same thread as your real bank. Unfortunately, this approach only works if there are no grey routes, SIM boxes, or fraudulent channels into the network that bypass this verification mechanism.
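The core idea of a verified sender scheme can be sketched as a registry lookup. This is a simplified illustration under our own assumptions, not the actual design of any scheme: the registry contents, the route names, and the `allow_message` function are all hypothetical.

```python
# Hypothetical registry: sender ID -> routes the registered brand may send from.
REGISTERED_SENDERS = {
    "MYBANK": {"aggregator-a"},
}


def allow_message(sender_id: str, source_route: str) -> bool:
    """Block a registered sender ID unless it arrives over an approved route."""
    approved_routes = REGISTERED_SENDERS.get(sender_id.upper())
    if approved_routes is None:
        # Sender IDs outside the registry are not covered by the scheme.
        return True
    return source_route in approved_routes


print(allow_message("MYBANK", "aggregator-a"))        # genuine bank traffic
print(allow_message("MyBank", "unknown-route"))       # spoofed sender ID
print(allow_message("+15555550100", "unknown-route")) # ordinary phone number
```

The sketch also shows the limit of the approach: a message that does not claim a registered sender ID, or that enters the network by a path where this check is never run, is not stopped.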

Thanks to the convoluted nature of how A2P SMS works, though, we are all conditioned to receiving genuine communication from long codes (phone numbers instead of alphanumeric IDs), so many of us would not even bat an eye at receiving bank communication from a strange long code. Verified sender schemes are a worthwhile and commendable pursuit, but they still do not come close to solving the threat posed by smishing.

Other solutions:

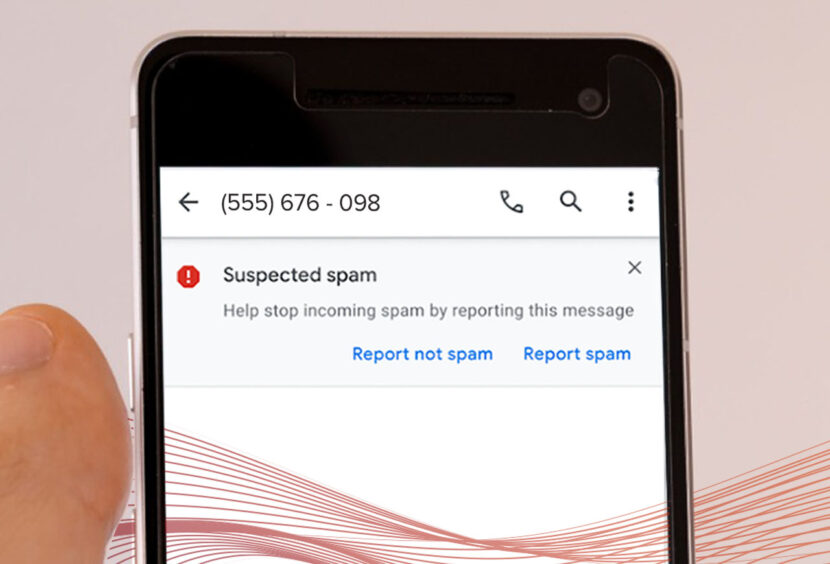

Google includes a mechanism in its SMS feature that asks customers whether the website might be “spam” or not. Relying on the general public is inaccurate and slow, and whether it is “spam” or not, the phishing URL remains a problem.

Of course, we have to discuss the merits of AI and machine learning to automatically detect new dangerous domains and new dangerous URLs that are on safe domains. These technologies are great for many reasons, but they need time to learn, and even then it is mathematically impossible to get it right most of the time, let alone all of the time. Attackers only need to get it right once.

More information on the Cellusys SMS Anti-Phishing Solution

How SMS Anti-Phishing works:

The SMS Anti-Phishing Solution can be used in conjunction with an SMS firewall or as a standalone solution for SMS phishing and Flubot attacks.

Every SMS is checked for the presence of a URL. Cellusys authenticates every URL against the Phishing Threat Intelligence Database registry. Even if the URL redirects multiple times across multiple domains, the destination URL will be authenticated.
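The two steps described above, finding URLs in a message and following redirects to the final destination, can be illustrated as follows. This is a generic sketch and not the Cellusys implementation: the regular expression is deliberately simple, and `next_hop` is a hypothetical callable standing in for an HTTP client that returns the redirect target, or `None` when there is no further redirect.

```python
import re
from typing import Callable, List, Optional

URL_PATTERN = re.compile(r"https?://\S+", re.IGNORECASE)
TRAILING_PUNCTUATION = ".,;:!?)\"'"


def extract_urls(sms_text: str) -> List[str]:
    """Find http(s) URLs and strip punctuation that trails the link."""
    return [m.rstrip(TRAILING_PUNCTUATION) for m in URL_PATTERN.findall(sms_text)]


def resolve_final_url(url: str,
                      next_hop: Callable[[str], Optional[str]],
                      max_hops: int = 10) -> str:
    """Follow redirects until none remain, guarding against loops."""
    seen = {url}
    for _ in range(max_hops):
        target = next_hop(url)
        if target is None:
            return url
        if target in seen:
            raise ValueError(f"redirect loop at {target}")
        seen.add(target)
        url = target
    raise ValueError("too many redirects")


# Made-up redirect chain across three domains.
redirects = {
    "https://short.example/a1": "https://hop.example/x",
    "https://hop.example/x": "https://landing.example/app.apk",
}

sms = "Your parcel is waiting: https://short.example/a1. Track it now!"
for link in extract_urls(sms):
    print(link, "->", resolve_final_url(link, redirects.get))
```

Checking the destination rather than the first link matters because the URL in the message is often just a shortener or a hop on the way to the real payload.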

The subscriber receives the message in one of three ways:

- Verified Safe URLs: the subscriber can open the link.

- Potentially Dangerous URLs that cannot be verified: the link is replaced with a redirect link to a warning page explaining why the page is blocked and urging the subscriber to open it only with extreme caution.

- Dangerous URLs that are known: the link is replaced with a redirect link to a warning page explaining why the page has been blocked.
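The three outcomes above can be sketched as a verdict plus a link rewrite. This is an illustration under stated assumptions, not the product's actual logic: the two lookup sets stand in for the threat intelligence database, and the warning page address and its query parameters are invented.

```python
from enum import Enum
from urllib.parse import quote


class Verdict(Enum):
    SAFE = "safe"
    UNVERIFIED = "unverified"
    DANGEROUS = "dangerous"


# Hypothetical stand-ins for a threat intelligence lookup.
KNOWN_SAFE = {"https://bank.example/login"}
KNOWN_DANGEROUS = {"https://landing.example/app.apk"}
WARNING_PAGE = "https://warning.operator.example/check"  # invented address


def classify(url: str) -> Verdict:
    """Known dangerous wins, then known safe; everything else is unverified."""
    if url in KNOWN_DANGEROUS:
        return Verdict.DANGEROUS
    if url in KNOWN_SAFE:
        return Verdict.SAFE
    return Verdict.UNVERIFIED


def rewrite_link(url: str) -> str:
    """Leave safe links alone; route the rest through a warning page."""
    verdict = classify(url)
    if verdict is Verdict.SAFE:
        return url
    return f"{WARNING_PAGE}?verdict={verdict.value}&url={quote(url, safe='')}"


def rewrite_sms(text: str, url: str) -> str:
    """Replace the original link in the message body with the rewritten one."""
    return text.replace(url, rewrite_link(url))


print(rewrite_sms("Log in at https://bank.example/login", "https://bank.example/login"))
print(rewrite_sms("Track: https://landing.example/app.apk", "https://landing.example/app.apk"))
print(rewrite_sms("Win now: https://unknown.example/prize", "https://unknown.example/prize"))
```

Treating anything that cannot be verified as potentially dangerous, rather than as safe, is the design choice that separates this approach from a plain blocklist.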

More Information about Fraud Insight solution for Malware

Sources:

https://www.wandera.com/mobile-threat-landscape/

https://www.liquidweb.com/blog/ssl-certificates/-of-ssl-certificate
